Automating Noise Pollution Enforcement: Using AI to Streamline NYC's Noise Camera Enforcement Program

Written by Jonathn Chang

The New York City Department of Environmental Protection (DEP) is the NYC agency responsible for managing the city’s clean water and wastewater systems. It is also tasked with protecting the environment and public health by regulating air and noise pollution, as well as maintaining the sprawling underground network of water and sewer pipes.

Figure 1: Screenshot of the red-dot camera’s footage capturing a noise violation from a white Ford Mustang.

Under NYC’s noise pollution regulation, the Noise Camera Enforcement Program at DEP monitors noise pollution on busy NYC streets. The program tracks vehicles that deliberately violate established noise thresholds, for example by revving an engine, running a modified muffler, or honking excessively. The program deploys nine SoundVue noise cameras from Intelligent Instruments at high-traffic intersections citywide (Local Law 7 of 2024 mandates 25 cameras by September 2025, though underfunding has delayed that goal). Each SoundVue system consists of three to five cameras capturing various angles of the intersection, with one specially designed “red-dot camera” that monitors the sound intensity on the street and records an event when noise exceeds a preset decibel level. The system can then triangulate the source of the noise and mark the offending vehicle with a red dot in the recorded footage, which helps inspectors identify the vehicle and its information later.

Since the program’s launch in July 2021, around 35,000 events have been recorded, with up to 40 events on the busiest days. DEP’s noise camera inspectors manually review the red-dot camera footage to determine whether each event is actionable, locate the vehicle in each of the other camera angles to extract its license plate, and then issue a summons to the vehicle owner.

How can NYC DEP utilize state-of-the-art AI and ML tools to automate the manual inspection process in their Noise Camera Enforcement Program?

Manual review is slow and repetitive. As a Siegel PiTech PhD Impact Fellow, my project focused on exploring how AI and ML methods could reduce this burden.

Figure 2: Screenshot of the red-dot camera during heavy rain and thunder. Vehicles are difficult to make out, and the red dot is triggered by a noise in the atmosphere.

A major source of inefficiency in manual inspection is filtering out non-actionable events: many recordings are triggered by emergency vehicle sirens (EMS/NYPD/FDNY), large truck engines, construction noise, or off-camera/highway vehicles, none of which violate noise ordinances. The challenge is to distinguish these from genuinely actionable violations, such as a loud car or motorcycle muffler, or excessive and unnecessary honking.

I hypothesized that this filtering task could be accomplished in a single pass by querying Google’s Gemini 2.5 Pro with the red-dot camera angle of the event alongside a carefully engineered prompt. Gemini’s advanced video and audio capabilities could simultaneously analyze the audio in the footage, consider the location of the red dot throughout the video, and note any vehicles that appear to be speeding, much as a human inspector would review the event.
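As a rough illustration of the single-query idea, the sketch below builds a prompt, hands it to a model together with the red-dot video, and parses a structured verdict. Everything here is an assumption for illustration: the prompt text, the JSON reply format, and the names `PROMPT`, `parse_verdict`, `classify_event`, and `ask_model` are hypothetical and are not the prompt or code used in the project. The actual Gemini API call is left to a caller-supplied function so the sketch does not depend on any particular SDK.

```python
import json
from typing import Callable

# Hypothetical prompt; the engineered prompt used in the project is not given.
PROMPT = (
    "You are reviewing footage from a noise camera. A red dot marks the "
    "estimated source of the noise. Decide whether the event is actionable "
    "(for example a loud muffler or excessive honking) or non-actionable "
    "(for example a siren, truck engine, construction, or off-camera source). "
    'Reply with JSON only: {"actionable": true or false, '
    '"vehicle": "<color make model, or null>", "reason": "<short reason>"}'
)


def parse_verdict(reply: str) -> dict:
    """Extract the JSON verdict from a model reply.

    Any reply that cannot be parsed is treated as actionable, so that
    unclear cases still reach a human inspector instead of being dropped.
    """
    fallback = {"actionable": True, "vehicle": None, "reason": "unparseable reply"}
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end <= start:
        return fallback
    try:
        data = json.loads(reply[start : end + 1])
    except json.JSONDecodeError:
        return fallback
    if not isinstance(data.get("actionable"), bool):
        return fallback
    return {
        "actionable": data["actionable"],
        "vehicle": data.get("vehicle"),
        "reason": data.get("reason", ""),
    }


def classify_event(video_path: str, ask_model: Callable[[str, str], str]) -> dict:
    """Send one video plus the prompt to the model and return the verdict.

    `ask_model(video_path, prompt)` is a caller-supplied function that
    performs the real API call and returns the model's text reply.
    """
    return parse_verdict(ask_model(video_path, PROMPT))
```

Defaulting to "actionable" on a malformed reply is a deliberate choice in this sketch: it trades a little extra manual review for a lower chance of silently discarding a real violation.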

To test my hypothesis, I used a validation dataset of 1,869 previously manually inspected events across different times of day and seasons. Gemini was able to correctly identify non-actionable events 98.5% of the time (1.5% false negative rate) and actionable events 71% of the time (29% false positive rate). The low false negative rate is particularly valuable since overlooking a true violation (false negative) is more detrimental than over-flagging a benign event (false positive). Overall, employing this model to filter events would reduce the number of manually inspected events by approximately 51%.
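The exact definitions behind these rates are not spelled out here, so the following is only a sketch of one way such figures could be tabulated from paired human and model labels. The function name `filter_report`, the metric names, and the toy labels are all hypothetical; the toy data is for illustration only and is not the DEP validation set.

```python
from collections import Counter


def filter_report(pairs):
    """Summarize a filter from (human_actionable, model_actionable) pairs."""
    counts = Counter(pairs)
    tp = counts[(True, True)]    # real violation, flagged for review
    fn = counts[(True, False)]   # real violation, filtered out (a miss)
    fp = counts[(False, True)]   # benign event, still sent to a human
    tn = counts[(False, False)]  # benign event, filtered out
    total = tp + fn + fp + tn
    filtered = fn + tn           # events the model would remove from the queue
    flagged = tp + fp            # events still needing manual review
    return {
        "workload_reduction": filtered / total if total else 0.0,
        "missed_among_filtered": fn / filtered if filtered else 0.0,
        "benign_among_flagged": fp / flagged if flagged else 0.0,
    }


# Toy labels for illustration only.
toy = (
    [(True, True)] * 3
    + [(False, True)] * 1
    + [(False, False)] * 5
    + [(True, False)] * 1
)
report = filter_report(toy)
print(report)
```

Under this reading, the workload reduction is simply the share of events the model labels non-actionable, and the cost of that reduction is the share of filtered events that were in fact violations.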

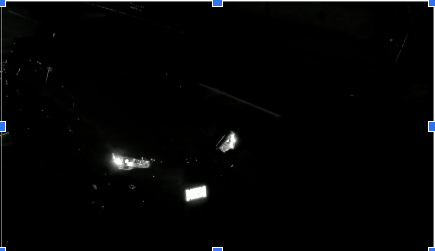

Figure 3: Screenshot of a noise camera angle at night. Due to the reflective nature of the license plate at night, the plate ID is unreadable.

Furthermore, extracting the offending vehicle’s license plate information could be automated by combining object detection with the vision capabilities of LLMs. I first used YOLO to extract snapshots of each vehicle across the other camera angles, and then OpenAI’s GPT-4.1 Mini to perform Automated License Plate Recognition (ALPR) and write each vehicle’s license plate to a database. Finally, Gemini’s classification of the offending vehicle according to the red-dot camera (e.g., “white Honda Odyssey”, “red Ford Mustang”) was used to link it to the vehicle’s license plate in the database.
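How the final linking step was implemented is not described, so the sketch below shows just one possible approach: fuzzy string matching between Gemini’s label and a text description stored with each plate. It assumes, hypothetically, that every database record carries a `description` and a `plate` field; the function names and the `min_score` threshold are likewise illustrative choices, not the project’s method.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity between two short descriptions (0 to 1)."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def link_plate(offender_label, records, min_score=0.6):
    """Return the plate whose description best matches the offender label.

    `records` is a list of dicts with hypothetical keys 'description' and
    'plate'. Returns None when no record is similar enough, so that weak
    matches are left for a human to resolve.
    """
    best = max(
        records,
        key=lambda r: similarity(offender_label, r["description"]),
        default=None,
    )
    if best is None or similarity(offender_label, best["description"]) < min_score:
        return None
    return best["plate"]


# Made-up records for illustration.
records = [
    {"description": "white Honda Odyssey", "plate": "ABC1234"},
    {"description": "red Ford Mustang", "plate": "XYZ9876"},
]
match = link_plate("White Honda Odyssey minivan", records)
```

Returning `None` below the threshold keeps a mismatched description from silently attaching the wrong plate to a summons, at the cost of leaving more events for manual lookup.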

I tested this ALPR pipeline on 30 hand-selected events with clearly visible license plates. In 21 events (70%), the offending vehicle was matched with Gemini’s label, but in only 9 (30%) was the license plate accurately extracted. The modest success rate reflects errors compounding across stages, driven in part by visual issues such as poor lighting, motion blur, and low plate clarity.

Impact and Path Forward

Over the summer, I demonstrated the viability of AI automation for noise enforcement inspection, with the possibility of reducing the manual inspection workload by 51% while maintaining inspection quality. However, full automation remains an open challenge, especially for robust ALPR. Future work should focus on refining the ALPR pipeline with more capable vision models, an image pre-processing step to de-noise and de-blur license plates, and higher-quality source footage in terms of resolution, motion blur, and lighting.

Jonathn Chang

Ph.D. student, Applied Mathematics, Cornell University

Acknowledgements

This work was conducted as part of the Siegel PiTech PhD Impact Fellowship program during summer 2024. I extend my sincere gratitude to James Huang, my primary mentor at NYC DEP, whose technical expertise and guidance were instrumental in shaping this project's direction and implementation, and to the broader DEP team who provided valuable operational insights and data access. I am also grateful to the PiTech Fellowship program for providing funding support for API usage.